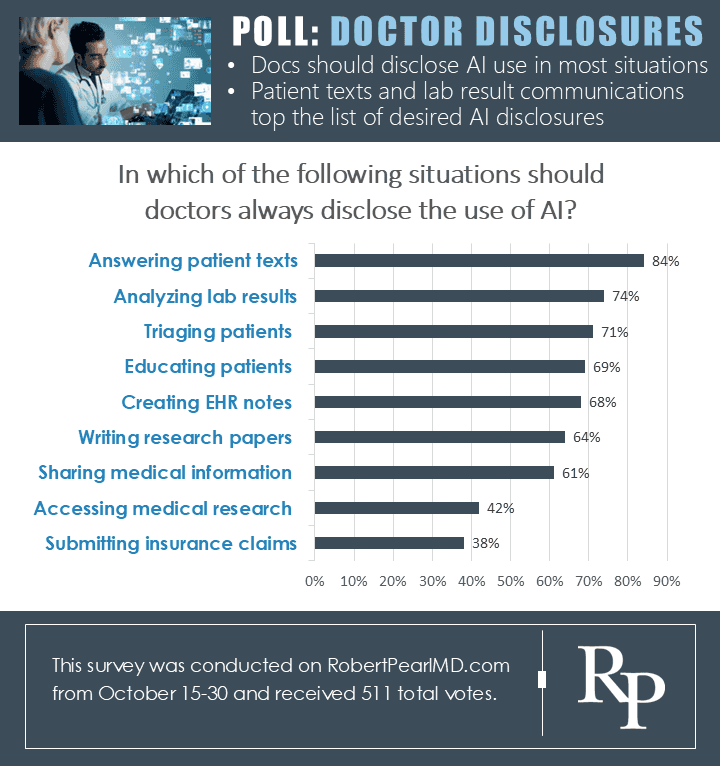

Today, 1 in 6 Americans use artificial intelligence to answer medical questions. Clinicians, meanwhile, are increasingly relying on AI as a healthcare tool. With that context, I asked readers to share their thoughts on doctors' responsibility to disclose their use of AI in medical situations. Here are the results:

My thoughts: The survey responses reveal a clear distinction. In areas that directly affect patient care and academic work, most readers believe disclosure of AI use is essential. However, when doctors use AI solely to gather background information for themselves or to handle administrative tasks like insurance claims, most respondents feel disclosure is unnecessary. This feedback underscores how much trust matters in the relationships between clinicians, patients and fellow medical professionals.

Any deception, real or perceived, weakens the bond between healthcare providers and patients and erodes trust. While research has shown that GenAI can generate medical diagnoses that are four times more accurate and nine times more empathetic than those from human doctors, patients may still feel misled if they assume an AI-generated communication came directly from a doctor. Healthcare leaders would be wise to weigh these insights carefully when deciding how to use AI in patient interactions.

Thanks to all who voted! To participate in future surveys, and for access to timely news and opinion on American healthcare, sign up for my free (and ad-free) newsletter Monthly Musings on American Healthcare.

* * *

Dr. Robert Pearl is the former CEO of The Permanente Medical Group, the nation's largest physician group. He's a Forbes contributor, bestselling author, Stanford University professor, and host of two healthcare podcasts. Check out Pearl's newest book, ChatGPT, MD: How AI-Empowered Patients & Doctors Can Take Back Control of American Medicine, with all profits going to Doctors Without Borders.